May 23, 2025

TechXplore Features IST Research

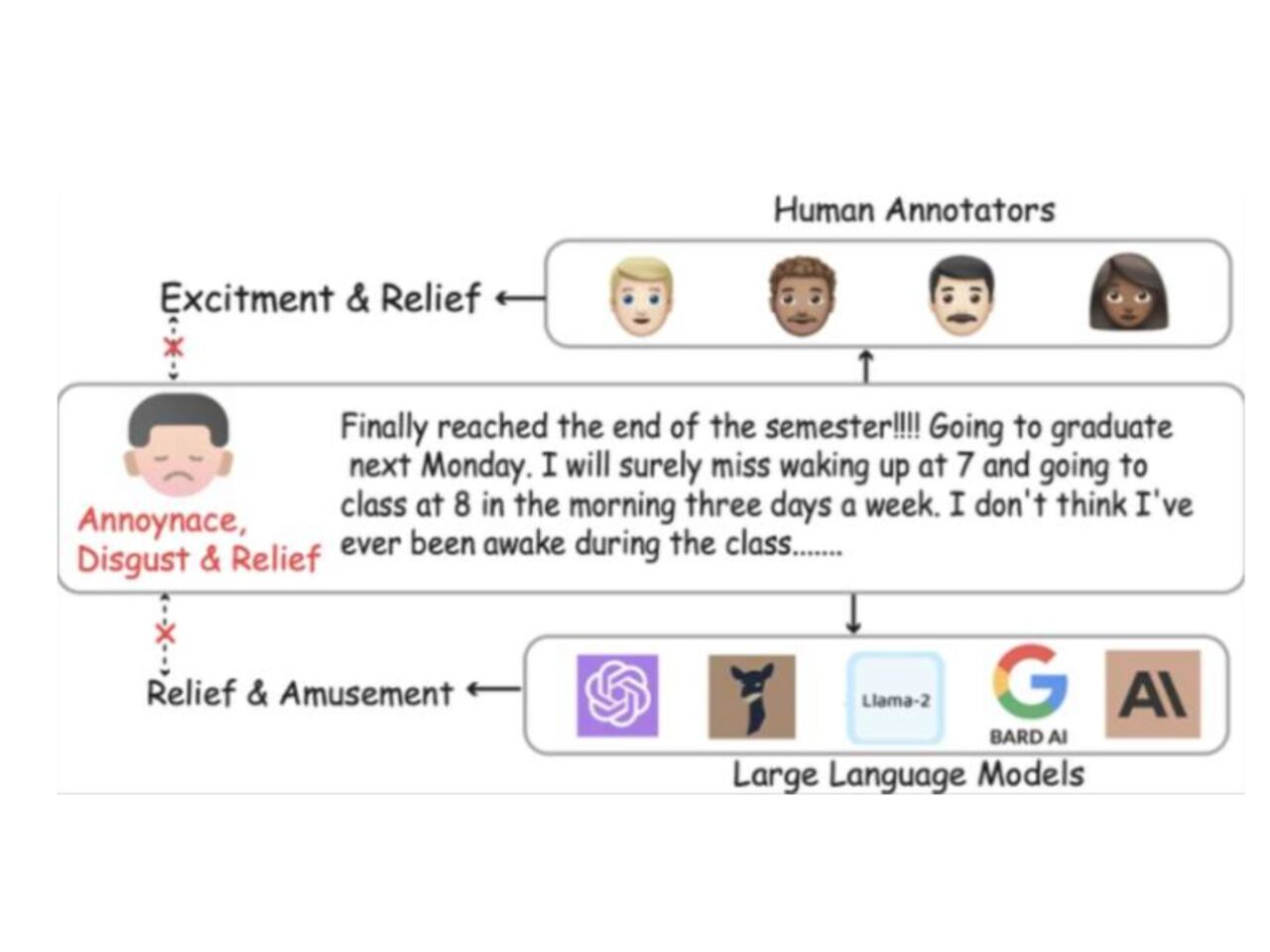

As third-party annotators, are humans or LLMs better at identifying the emotions expressed by others in their written texts?

A recent story in TechXplore, "Third-party data annotators often fail to accurately read the emotions of others, study finds," featured research from the Penn State College of IST.

The paper, "Can Third Parties Read Our Emotions?", was recently accepted to the 63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025), which will take place in Vienna, Austria, in July.

The researchers compared the emotions that social media users identified in their own posts with third-party human and LLM-sourced annotations. They found that LLMs outperformed humans at this task nearly across the board.

Jaiyi Li, a graduate student pursuing a doctorate in informatics, is first author on the paper. Fellow researchers include Yingfan Zhou, informatics Ph.D. student; Pranav Narayanan Venkit, IST doctoral alumnus; Halima Binte Islam, master of public policy student at Penn State; Sneha Arya, applied data sciences student; Shomir Wilson, associate professor; and Sarah Rajtmajer, assistant professor.